Automatic Dependency Updates with Renovate and GitLab

Most software projects use other projects or libraries as dependencies. And if we describe infrastructure as code, we also depend on other things, e.g. specific versions of Terraform modules or Docker images. The best practice is to always specify dependencies as precisely as possible, so that the outcome of a build or deployment is reproducible. When a codebase has many dependencies, it can become a tedious task to keep track of all new releases and to keep the dependency definitions up to date (and tested). Wouldn't it be nice to automate this?

Dependabot 🤖

Users of GitHub probably know Dependabot. It is a bot that monitors GitHub repositories and creates pull requests when it detects available security updates for dependencies. This is nice and free of charge, but naturally it doesn't work if the code isn't hosted on GitHub. The core code of Dependabot is public, but it isn't open source, and neither its documentation nor its license is really friendly to running your own instance.

Renovate 🖌

Luckily, there is an alternative to Dependabot: Renovate. Renovate is open source, scans git repositories for all kinds of dependencies and creates merge requests when new releases are available. Check the extensive list of supported package managers to see whether Renovate can update your dependencies. Renovate is available as a hosted app for GitHub and GitLab, or we can run our own instance. For a self-hosted GitLab instance we have to run Renovate ourselves as well. The official documentation lists all available configuration options but lacks examples of how to actually run your own instance.

Renovate is not designed as a daemon that runs permanently and scans all configured repositories in a loop. Instead, it expects to be started at regular intervals and checks the dependencies once per run. This is convenient: we don't need a server that runs Renovate constantly, and Renovate fits perfectly into a CI pipeline that runs it as a regular job.

Credentials 🔑

First of all, we need a few credentials. We log in to our GitHub account and go to the developer settings. There we create a personal access token; it doesn't need any permissions at all. Renovate uses this token to fetch the public changelogs of our dependencies. Without a token GitHub would rate-limit us, so we need one even though Renovate only reads public information. We store the token as a CI/CD variable named GITHUB_COM_TOKEN in the pipeline settings of our GitLab project.

Next, we create a personal access token with the api scope in GitLab. We can do this either with our existing GitLab account or with a new one. Using the existing account has a disadvantage: all of Renovate's actions will look as if we had carried them out ourselves. This also means that we don't get any emails when Renovate creates new merge requests, because GitLab doesn't send emails about our own actions. Therefore, it makes sense to create a new "Renovate" account, invite it to our project with at least the Developer role (so that it can push branches) and create a personal access token for that account. Either way, we store the token as a CI/CD variable named RENOVATE_TOKEN as well. Renovate uses this token to create branches and merge requests in our git repository.
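A missing RENOVATE_TOKEN only surfaces as an error from Renovate itself, and a missing GITHUB_COM_TOKEN may go unnoticed apart from rate-limit warnings in the log. The following is a minimal sketch of a fail-fast check, assuming the variable names above and the Renovate job defined in the next section. The check is our own addition and not something Renovate requires.

```yaml
Renovate:
  extends: .renovate
  before_script:
    # Our own sanity check, not a Renovate feature: stop early with a clear
    # message if one of the two CI/CD variables is missing or empty.
    - 'test -n "${RENOVATE_TOKEN}" || { echo "RENOVATE_TOKEN is not set"; exit 1; }'
    - 'test -n "${GITHUB_COM_TOKEN}" || { echo "GITHUB_COM_TOKEN is not set"; exit 1; }'
```

This would replace the two-line Renovate job at the end of the next section; the .renovate template itself stays unchanged.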

GitLab CI 🦊

Now let's add the following jobs to our .gitlab-ci.yml. With the tokens above in place, that is all we need: Renovate reads RENOVATE_TOKEN and GITHUB_COM_TOKEN from the environment by itself, so the next pipeline run will run Renovate for our repository. (But you may want to read the renovate.json section below before you actually run it.)

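```yaml
.renovate:
  variables:
    RENOVATE_GIT_AUTHOR: "${GITLAB_USER_NAME} <${GITLAB_USER_EMAIL}>"
    RENOVATE_DRY_RUN: "false"
    RENOVATE_LOG_LEVEL: "info"
  image:
    name: renovate/renovate
    # Clear the image's entrypoint so that GitLab can run the script below.
    entrypoint: [""]
  script:
    # Folded (">") scalar: the lines below are joined into a single command.
    # CI_API_V4_URL is the API of the GitLab instance running this pipeline,
    # CI_PROJECT_PATH is the current project, i.e. the repository to update.
    - >
      node /usr/src/app/dist/renovate.js
      --platform "gitlab"
      --endpoint "${CI_API_V4_URL}"
      --git-author "${RENOVATE_GIT_AUTHOR}"
      --dry-run "${RENOVATE_DRY_RUN}"
      --log-level "${RENOVATE_LOG_LEVEL}"
      "${CI_PROJECT_PATH}"
  # Run for the master branch and for scheduled pipelines.
  only:
    - master
    - schedules

Renovate:
  extends: .renovate
```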
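GitLab's documentation prefers rules over only/except, which are no longer actively developed. Below is a sketch of the same template using rules instead of only, assuming the default branch is still called master. It also adds resource_group, a GitLab keyword that prevents jobs of the same group from running at the same time. Because Renovate runs both on pushes to master and on a schedule, this keeps two runs from working on the same merge requests in parallel. The group name renovate is arbitrary.

```yaml
.renovate:
  variables:
    RENOVATE_GIT_AUTHOR: "${GITLAB_USER_NAME} <${GITLAB_USER_EMAIL}>"
    RENOVATE_DRY_RUN: "false"
    RENOVATE_LOG_LEVEL: "info"
  image:
    name: renovate/renovate
    entrypoint: [""]
  # Jobs that share a resource group never run concurrently, even across
  # pipelines of the same project. "renovate" is an arbitrary group name.
  resource_group: renovate
  script:
    - >
      node /usr/src/app/dist/renovate.js
      --platform "gitlab"
      --endpoint "${CI_API_V4_URL}"
      --git-author "${RENOVATE_GIT_AUTHOR}"
      --dry-run "${RENOVATE_DRY_RUN}"
      --log-level "${RENOVATE_LOG_LEVEL}"
      "${CI_PROJECT_PATH}"
  # Replaces "only": run for the master branch and for scheduled pipelines.
  rules:
    - if: '$CI_COMMIT_BRANCH == "master"'
    - if: '$CI_PIPELINE_SOURCE == "schedule"'
```

GitLab rejects jobs that combine rules with only or except, so the only block has to go entirely when switching.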

GitLab CI Templates

The Renovate CI job is a good candidate for reuse across many projects, so it makes sense to put the job definition into an external template and include it in every pipeline where we want to use it. This way, a change to the template updates all pipelines at once. Here is a short example of including the above template in another pipeline. Check out the GitLab docs to learn more about templates and includes.

```yaml
include:
  - remote: https://gitlab.com/ekeih/ci-templates/-/raw/master/renovate.yml

Renovate:
  extends: .renovate
```
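On a self-hosted GitLab instance the template will often live in an internal project. include:remote only works with publicly accessible URLs, whereas include:project pulls a file from another project on the same instance. The following is a minimal sketch, assuming a hypothetical project my-group/ci-templates that contains renovate.yml on its master branch. It also shows that a job extending .renovate can override single variables of the template.

```yaml
include:
  # Hypothetical project path: replace with the project holding the template.
  - project: "my-group/ci-templates"
    ref: master
    file: "/renovate.yml"

Renovate:
  extends: .renovate
  variables:
    # Only this key is overridden; the other template variables are kept.
    RENOVATE_LOG_LEVEL: "debug"
```

Because extends merges the variables of the template and the job key by key, RENOVATE_GIT_AUTHOR and RENOVATE_DRY_RUN keep their template values.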

renovate.json 💾

We put only very few options into the Renovate job template, which makes the job generic enough to include in every GitLab project we want. Project-specific settings go into the repository itself, in a renovate.json file. There are two ways to create this config file.

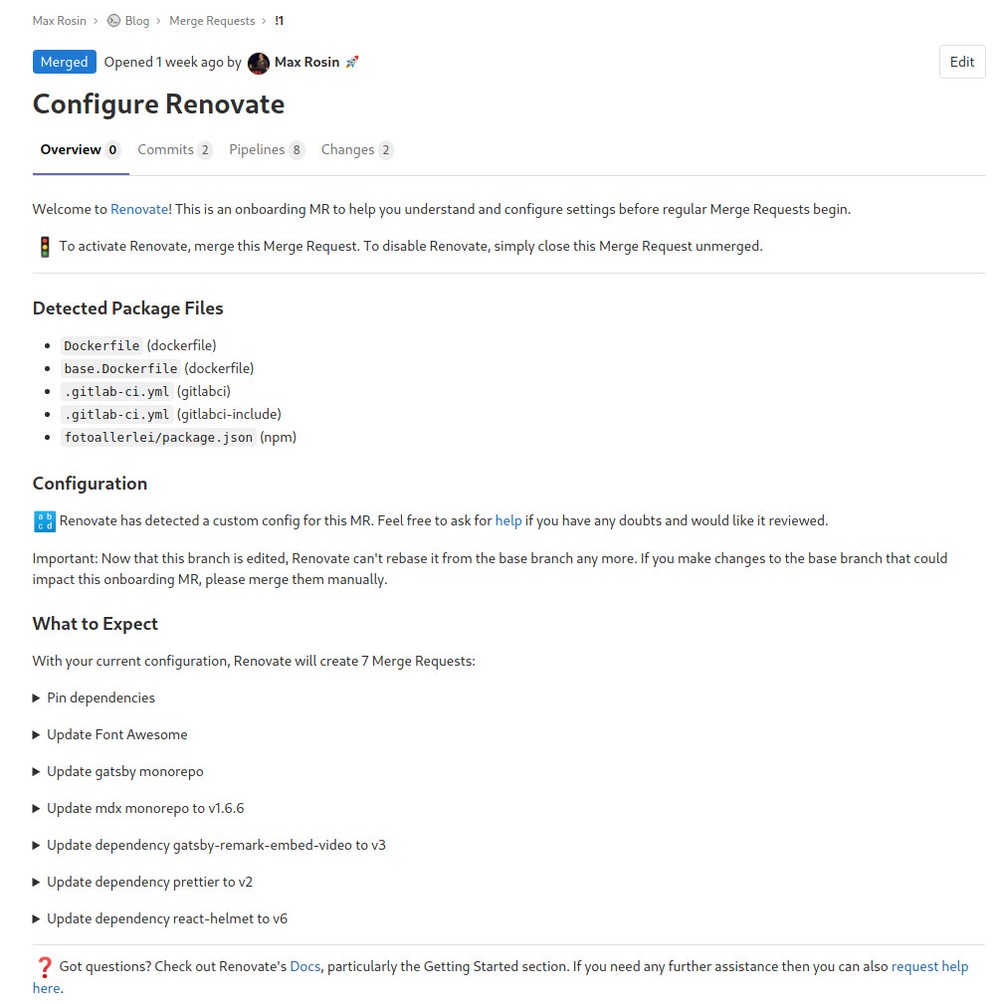

Onboarding Merge Request

If we simply run the pipeline now, Renovate will detect the supported package managers in the repository and create an onboarding merge request, as shown in the screenshot. In this example, Renovate found two Dockerfiles, a .gitlab-ci.yml file, templates included by the .gitlab-ci.yml file and a package.json file with NPM packages in a subdirectory. Below the list of files, Renovate shows a preview of the merge requests it will create once we merge the current configuration. The onboarding merge request adds an almost empty renovate.json file. If we want, we can push additional commits to the merge request to add settings to the renovate.json and run the pipeline again. During the next run, Renovate updates the merge request based on the new settings, and we can check the preview to make sure that it would do what we want. When we are happy with the preview, we merge the onboarding merge request, and during the next pipeline run Renovate creates merge requests for outdated dependencies based on our settings.

Manual Creation

If we already know which settings we need, we can skip the onboarding merge request by pushing a renovate.json file before Renovate runs for the first time. This is especially helpful when the repository doesn't contain any package manager files that Renovate detects by default: in that case Renovate doesn't create an onboarding merge request at all, so we have to create the renovate.json ourselves and tell Renovate how to find the package manager files in our repository. Check out the upstream docs about fileMatch to find out more.

Example renovate.json

The repository of this blog currently uses the following renovate.json. The entries in extends are config presets provided by Renovate, which are a good way to get started with sensible defaults; all available presets are explained in the upstream documentation. If we extended only the config:base preset, Renovate would create at most two merge requests per hour, to avoid overloading the CI and to avoid the general noise of too many merge requests. By extending :prHourlyLimitNone we override this setting, and Renovate creates all merge requests at once. The remaining presets rebase merge requests whenever the base branch has been updated (:rebaseStalePrs), add the label renovate (:label(renovate)) and assign the merge requests to the user max (:assignee(max)). In the packageRules we disable updates for the NPM package sharp. packageRules control the exact update behaviour for specific packages or groups of packages: for example, we can ignore a package, apply patch and minor updates but ignore major versions, automerge specific packages, etc. It is definitely worth taking the time to read the documentation of all available settings to get an idea of the possibilities.

```json
{
  "$schema": "https://docs.renovatebot.com/renovate-schema.json",
  "extends": [
    "config:base",
    ":prHourlyLimitNone",
    ":rebaseStalePrs",
    ":label(renovate)",
    ":assignee(max)"
  ],
  "packageRules": [
    {
      "packageNames": ["sharp"],
      "enabled": false
    }
  ]
}
```

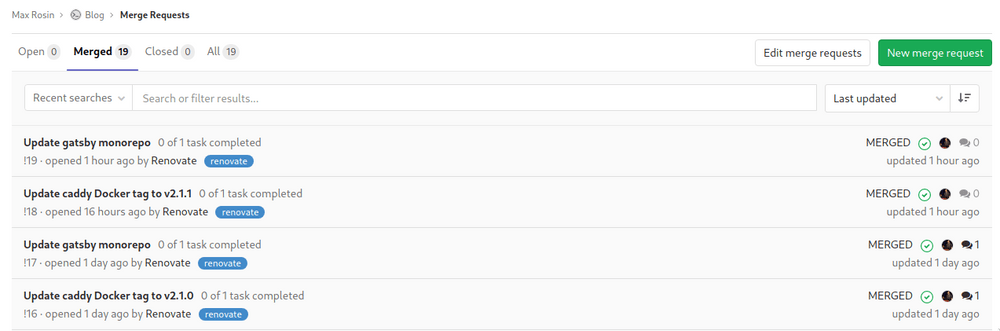

Two interesting features of the config:base preset are that Renovate tries to pin all our JavaScript dependencies to specific versions and that it can group several updates into a single merge request. The Renovate docs have a good explanation of version pinning. Grouping is useful for dependencies that are released from a monorepo: Gatsby, for example, ships a bunch of packages from one repository, and Renovate has presets that know about such monorepos and put all their updates into a single merge request. For this blog, this often means one merge request instead of 19.

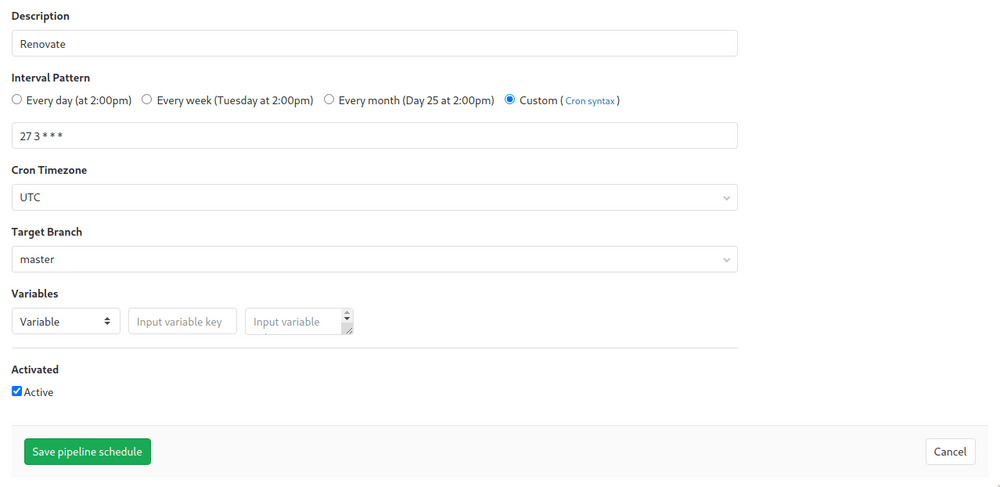

Pipeline Schedule 🔁

So far we have configured our GitLab CI to run Renovate each time the pipeline runs for our master branch. But what if we don't commit anything to this repository for a while? Then the pipeline doesn't run, and we never get merge requests for available dependency updates. To solve this, we can configure GitLab to run our pipeline on a schedule. In the screenshot below we configure the pipeline to run once a day. This way, Renovate runs at least every 24 hours and we get merge requests in time.

One thing to keep in mind is that we should exclude all other jobs in our pipeline from the scheduled run. This is possible with except in our .gitlab-ci.yml:

```yaml
build:
  script:
    - echo hello world
  except:
    - schedules
```
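Adding except to every job gets repetitive in larger pipelines, and GitLab prefers rules for new configurations. The following sketch moves the condition into a hidden job with the hypothetical name .not-on-schedule that other jobs extend.

```yaml
# Hypothetical hidden job: extend it from every job that should
# not run in the daily Renovate schedule.
.not-on-schedule:
  rules:
    # Never add the job to scheduled pipelines.
    - if: '$CI_PIPELINE_SOURCE == "schedule"'
      when: never
    # Also skip merge request pipelines (see note below).
    - if: '$CI_PIPELINE_SOURCE == "merge_request_event"'
      when: never
    # Run normally in all other pipelines (pushes, tags, web, API, ...).
    - when: on_success

build:
  extends: .not-on-schedule
  script:
    - echo hello world
```

The rule for merge_request_event pipelines keeps the catch-all rule at the end from adding merge request pipelines, which could otherwise lead to duplicate pipelines for branches with open merge requests.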

Conclusion 🏁

By setting up Renovate to run every day and each time we modify the master branch, we ensure that we receive merge requests for all available dependency updates in time. We can configure the details of the updates on a very fine-grained level. This frees us from the burden of manually tracking releases of our dependencies and reduces the risk of security issues caused by missed updates. Thanks to the merge request model, we can still review changes manually and keep full control over our codebase. To sum it up: if you have a software project with dependencies and are tired of tracking them, set up Renovate and your life will be easier.